Building Robust Predictive Systems for Tabular Data and Sequential Data

🧑💻 Zhipeng “Zippo” He @ School of Information Systems

Queensland University of Technology

- XAMI LAB

- zhipeng.he@hdr.qut.edu.au

- github.com/ZhipengHe

- zhipenghe.me

IS Doctoral Consortium 2023

November 23, 2023

Supervisory Team

A/Prof. Chun Ouyang

Prof. Alistair Barros

A/Prof. Catarina Moreira (UTS)

\[ \DeclareMathOperator*{\argmin}{arg\,min} \newcommand{\one}{\unicode{x1d7d9}} \]

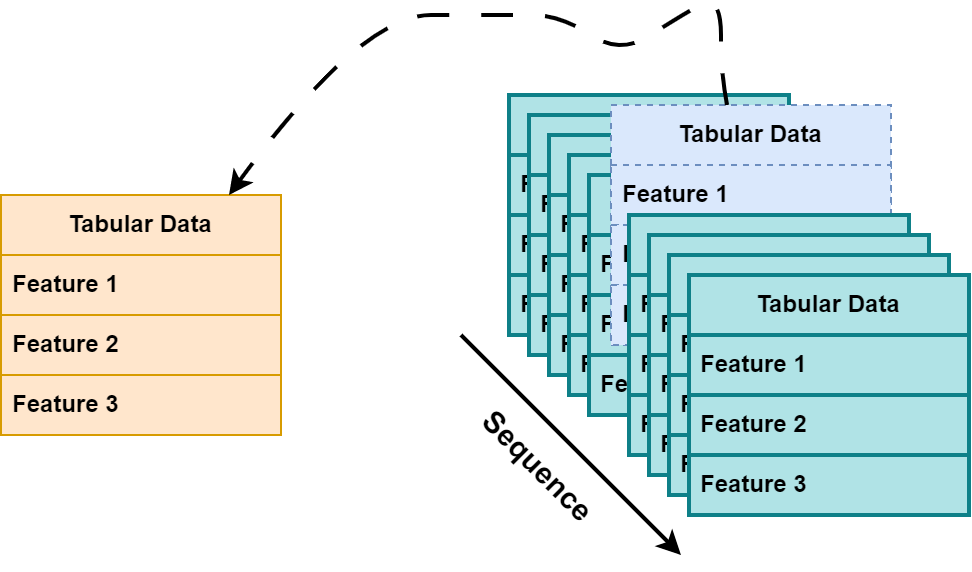

ML for Tabular & Sequential Data

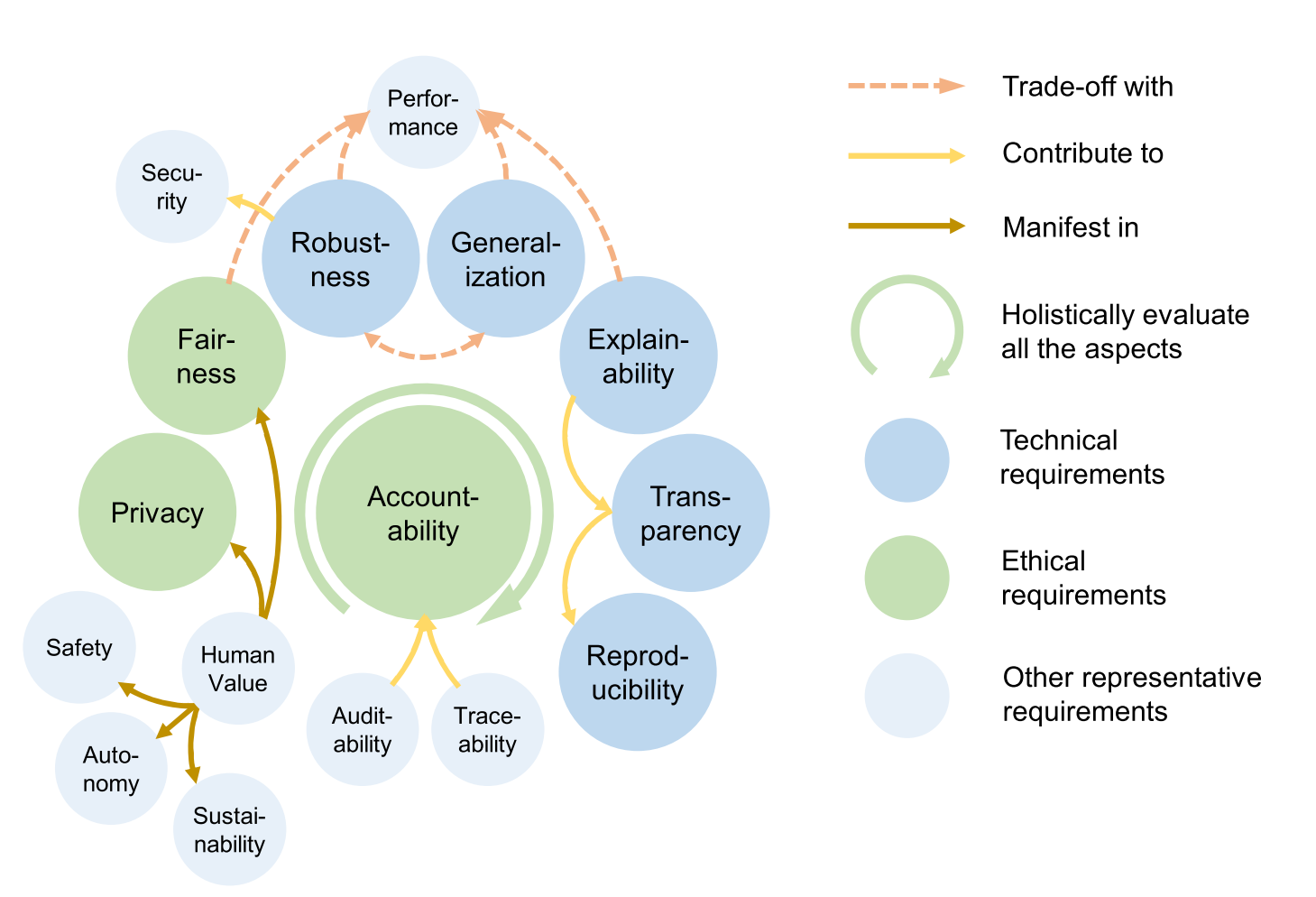

Trustworthy AI: Beyond Accuracy

Adversarial Robustness

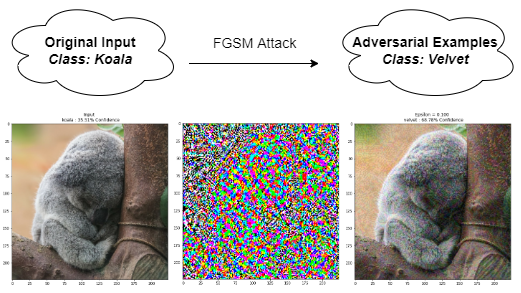

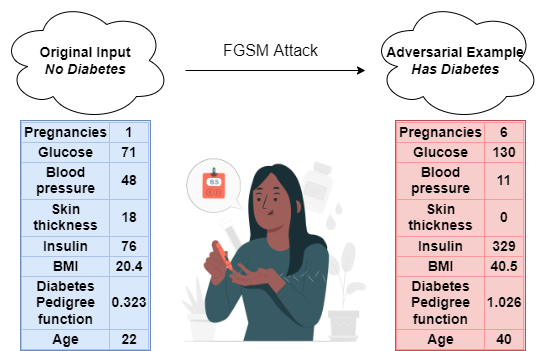

What are Adversarial Attacks?

Adversarial examples are specialised inputs created with the purpose of confusing a neural network, resulting in the misclassification of a given input. These notorious inputs are indistinguishable to the human eye but cause the network to fail to identify the contents of the image. (Goodfellow, Shlens, and Szegedy 2015)

- Mislead the prediction 😊

- Similarity ❓ \(\Longrightarrow\) What makes a good adversarial attack?

Input Data: Image VS Tabular (Mathov et al. 2022)

Image (unstructured):

- High-dimensional

- Homogeneous

- Continuous & consistent features

Tabular (structured):

- Low-dimensional

- Heterogeneous

- Numerical & categorical features

- Feature dependencies

Challenges in Tabular Data (Borisov et al. 2022)

Different feature ranges and feature types

Spatial dependencies between features are either missing or complex and irregular

Pre-processing interdependent features may cause information loss

Changing a single feature can entirely flip a prediction on tabular data

What Makes A Good Attack

Effectiveness

- Performance

- Measure the impact on models

Imperceptibility

- Indistinguishable to human observers

- Assess through knowledge in datasets

Transferability

- Transfer to other models

- Transfer to other datasets

In our research…

Effectiveness

Imperceptibility

Transferability

- Attack Success Rate

- Sparsity

- Proximity

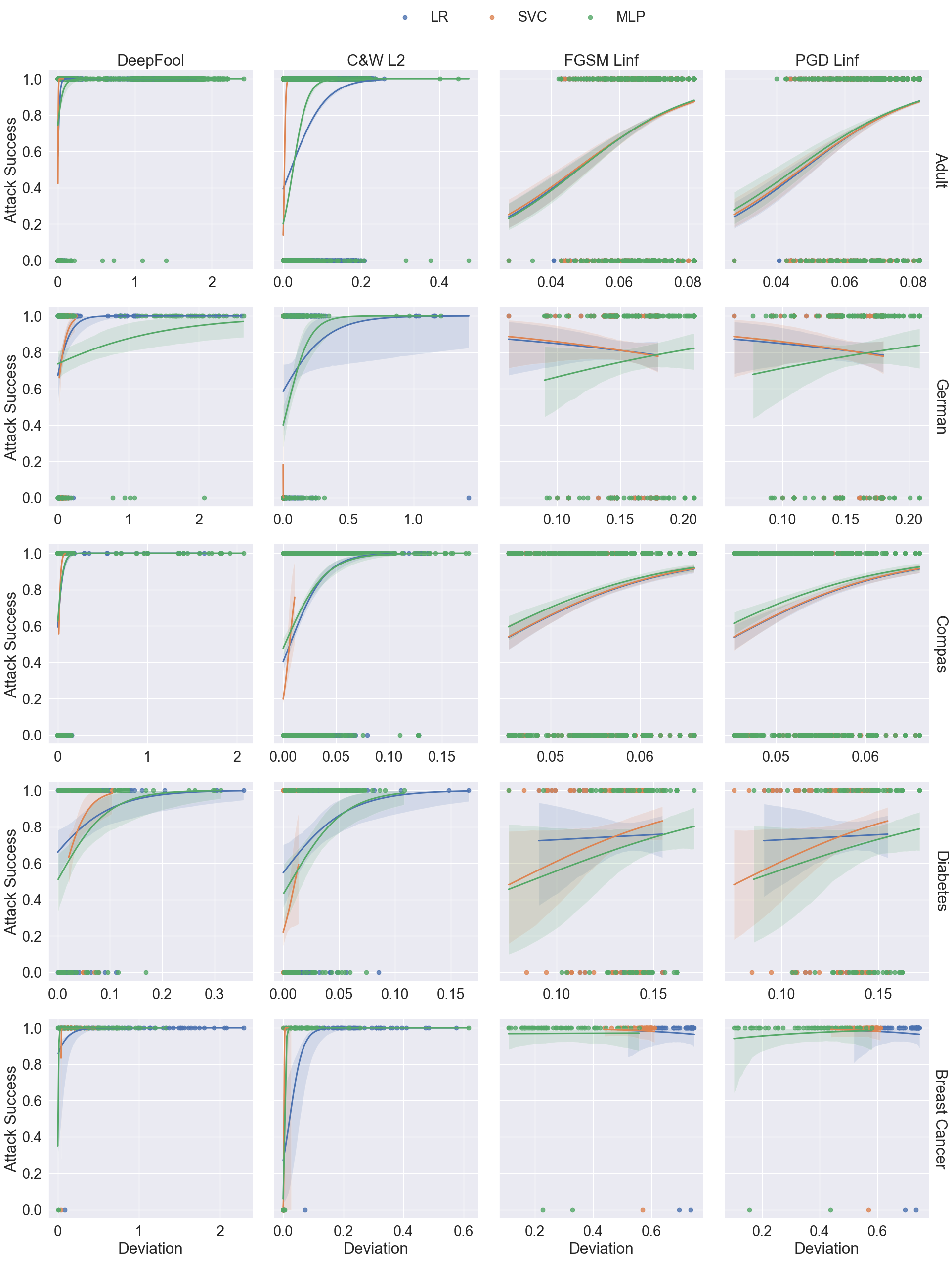

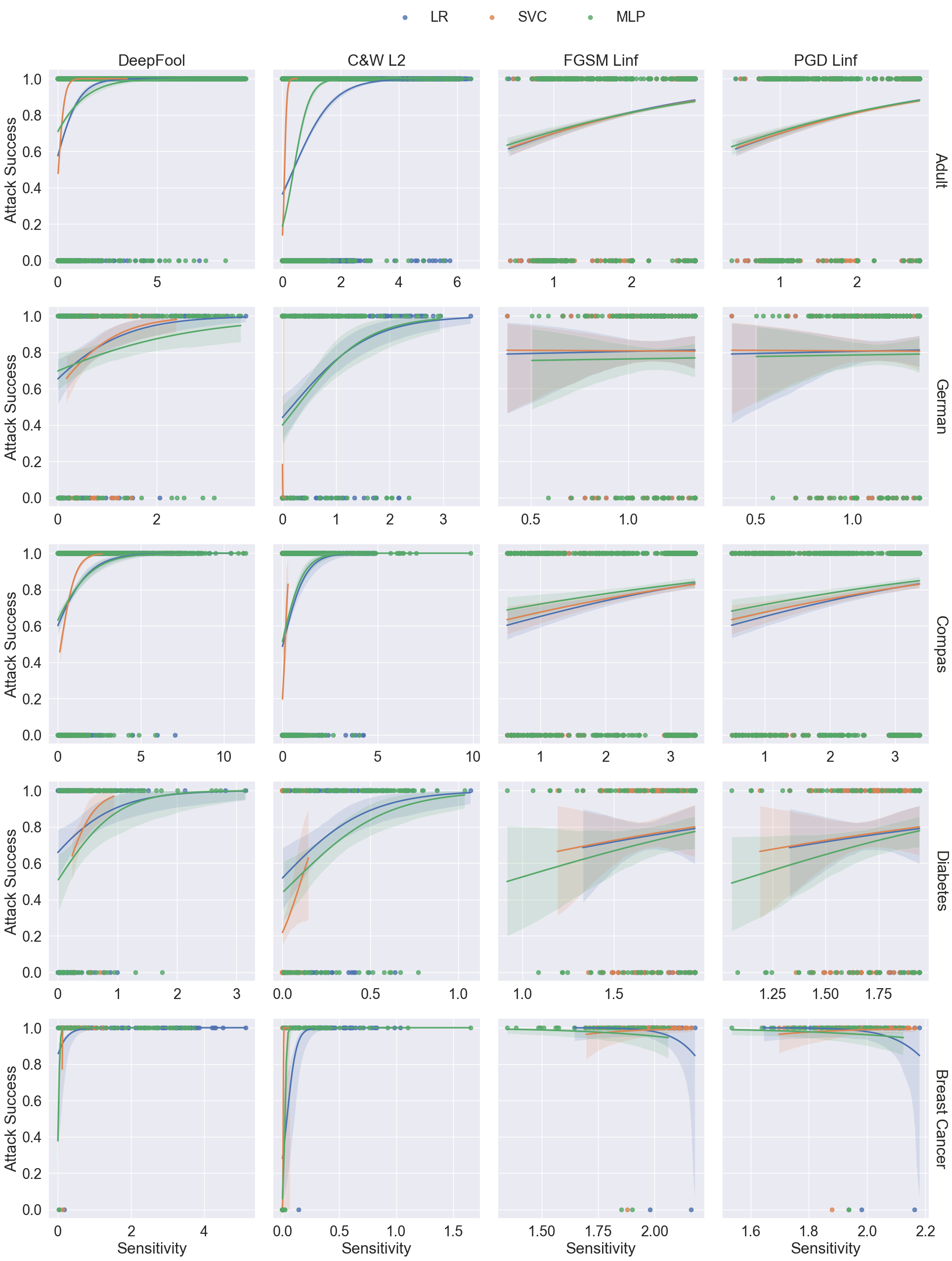

- Deviation

- Sensitivity

- Fesibility

- Immutability

- Future Work

Formalise Adversarial Problems

Given a machine learning classifier \(f: \mathbb{X}\to \mathbb{Y}\) mapping data instance \(\boldsymbol{x} \in \mathbb{X}\) to label \(y \in \mathbb{Y}\), an adversarial example \(\boldsymbol{x}^{adv}\) generated by an attack algorithm is a perturbed input similar to \(\boldsymbol{x}\), where \(\boldsymbol{\delta}\) denotes input perturbation. \[ \boldsymbol{x}^{adv} = \boldsymbol{x} + \boldsymbol{\delta} \hspace{0.8em}\text{subject to } f(\boldsymbol{x}^{adv})\neq y \]

\[ \text{Bounded attack: } \max{\mathcal{L}(f(\boldsymbol{x}^{adv}),y)} \hspace{0.8em}\text{subject to } \Vert\boldsymbol{\delta}\Vert \leq \eta \]

\[ \text{Unbounded attack: } \min{\Vert\boldsymbol{\delta}\Vert} \hspace{0.8em}\text{subject to } f(\boldsymbol{x}^{adv})\neq y \]

- Perturbation measured by distance metrics
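As an illustration of the bounded setting, the sketch below applies a one-step sign-gradient perturbation (in the style of FGSM, Goodfellow, Shlens, and Szegedy 2015) to a toy logistic regression model under an \(\ell_\infty\) budget \(\eta\). The weights, the input and the helper name `fgsm_logistic` are hypothetical and chosen only for this example; it is not the experimental setup of this work.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_logistic(x, y, w, b, eta):
    """One-step l_inf-bounded attack on a logistic regression model (sketch)."""
    p = sigmoid(np.dot(w, x) + b)   # predicted P(y = 1 | x)
    grad = (p - y) * w              # gradient of the cross-entropy loss w.r.t. x
    delta = eta * np.sign(grad)     # every entry has magnitude eta, so ||delta||_inf <= eta
    return x + delta

# Hypothetical toy model and input
w = np.array([2.0, -1.0])
b = 0.0
x = np.array([0.2, 0.1])
y = 1

x_adv = fgsm_logistic(x, y, w, b, eta=0.3)
print(sigmoid(np.dot(w, x) + b) > 0.5)      # original prediction is class 1
print(sigmoid(np.dot(w, x_adv) + b) > 0.5)  # prediction after the perturbation
```

An unbounded attack would instead search for the smallest \(\boldsymbol{\delta}\) that flips the prediction, without a fixed budget.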

Effectiveness Metrics: Success Rate

The success rate of an adversarial attack is the percentage of input samples that are successfully manipulated to cause misclassification by the model. Over \(n\) attacked samples with true labels \(y_i\): \[ \text{Attack Success Rate} = \frac{1}{n}\sum_{i=1}^{n}\one\bigl( f(\boldsymbol{x}^{adv}_i)\neq y_i\bigr) \]
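A minimal sketch of this metric, assuming a hypothetical `predict` callable that maps a batch of inputs to predicted labels (any classifier with that interface would do):

```python
import numpy as np

def attack_success_rate(predict, X_adv, y):
    """Fraction of adversarial examples whose prediction differs from the true label."""
    preds = np.asarray(predict(X_adv))
    return float(np.mean(preds != np.asarray(y)))

# Hypothetical threshold classifier on the first feature
def predict(X):
    return (X[:, 0] > 0).astype(int)

X_adv = np.array([[1.0], [-1.0], [2.0], [-3.0]])
y = np.array([1, 1, 0, 0])
asr = attack_success_rate(predict, X_adv, y)
```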

Imperceptibility Property: Sparsity

A good adversarial example should change the model’s prediction while perturbing as few features as possible.

Here, I adapt the \(\ell_0\) norm (Croce and Hein 2019) to tabular data as the sparsity metric, which counts the features that differ between an adversarial example \(\boldsymbol{x}^{adv}\) and the original input vector \(\boldsymbol{x}\).

\[ Spa(\boldsymbol{x}^{adv}, \boldsymbol{x})=\ell_0(\boldsymbol{x}^{adv}, \boldsymbol{x})=\sum_{i=1}^{n}\one( x^{adv}_i \neq x_i) \]
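The count of changed features can be sketched as follows. The tolerance `tol` is an assumption added here so that floating-point noise is not counted as a change; the feature values are hypothetical.

```python
import numpy as np

def sparsity(x_adv, x, tol=1e-8):
    """Number of features whose value differs between x_adv and x."""
    diff = np.abs(np.asarray(x_adv, dtype=float) - np.asarray(x, dtype=float))
    return int(np.sum(diff > tol))

x = np.array([1.0, 0.0, 3.0, 5.0])
x_adv = np.array([1.0, 1.0, 3.0, 4.5])
n_changed = sparsity(x_adv, x)  # features 2 and 4 were perturbed
```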

Imperceptibility Property: Proximity

A good adversarial example is expected to introduce minimal perturbation, which can be obtained as the smallest distance to the original feature vector.

- \(\ell_p\) norm: common perturbation metrics

\[ \ell_p(\boldsymbol{x}^{adv},\boldsymbol{x})=\Vert\boldsymbol{x}^{adv}-\boldsymbol{x}\Vert_p =\begin{cases} \Bigl(\sum_{i=1}^n \vert x^{adv}_i-x_i\vert^p \Bigr)^{1/p}, & p\in\{1,2\}\\ \max_{1\leq i\leq n}{\vert x^{adv}_i- x_i\vert}, & p = \infty \end{cases} \]
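A short sketch of the proximity metric using NumPy's vector norm; the helper name `proximity` and the example values are illustrative only.

```python
import numpy as np

def proximity(x_adv, x, p=2):
    """l_p distance between x_adv and x for p in {1, 2, np.inf}."""
    diff = np.asarray(x_adv, dtype=float) - np.asarray(x, dtype=float)
    return float(np.linalg.norm(diff, ord=p))

x = np.array([0.0, 0.0, 1.0])
x_adv = np.array([3.0, -4.0, 1.0])

l1 = proximity(x_adv, x, p=1)         # sum of absolute changes
l2 = proximity(x_adv, x, p=2)         # Euclidean distance
linf = proximity(x_adv, x, p=np.inf)  # largest single-feature change
```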

Imperceptibility Property: Deviation

Perturbed vectors should stay as close as possible to the distribution of the original data.

- Mahalanobis distance (MD), which generalises the \(\ell_2\) distance by accounting for the variance of, and correlation between, features. Given an input vector \(\boldsymbol{x}\), a perturbed vector \(\boldsymbol{x}^{adv}\) and the covariance matrix \(V\) of the data, it is defined by: \[ \text{MD}(\boldsymbol{x}^{adv}, \boldsymbol{x})= \sqrt{(\boldsymbol{x}^{adv}-\boldsymbol{x})^T V^{-1} (\boldsymbol{x}^{adv}-\boldsymbol{x})} \]
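A minimal sketch of the deviation metric, assuming \(V\) is invertible. In practice \(V\) could be estimated from the dataset, for example with `np.cov(X, rowvar=False)`; the diagonal matrix below is a hypothetical stand-in.

```python
import numpy as np

def mahalanobis(x_adv, x, V):
    """Mahalanobis distance between x_adv and x under covariance matrix V."""
    d = np.asarray(x_adv, dtype=float) - np.asarray(x, dtype=float)
    # Solve V z = d instead of forming the inverse of V explicitly
    return float(np.sqrt(d @ np.linalg.solve(V, d)))

x = np.array([0.0, 0.0])
x_adv = np.array([2.0, 4.0])
V = np.array([[4.0, 0.0],
              [0.0, 16.0]])  # hypothetical covariance: variances 4 and 16, no correlation

md = mahalanobis(x_adv, x, V)
```

With the identity matrix as \(V\), the result reduces to the \(\ell_2\) distance.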

Imperceptibility Property: Sensitivity

- For images, perturbation sensitivity is computed as the inverse of the standard deviation of the surrounding pixel region. (Luo et al. 2018)

- For tabular data, I adapted sensitivity as an \(\ell_1\) distance between \(\boldsymbol{x}\) and \(\boldsymbol{x}^{adv}\) in which each numerical feature is weighted by the inverse of its standard deviation within the input dataset \(\mathbb{X}\).

\[ \begin{gathered} \text{std}(x_{i})= \sqrt{ \frac{ \sum_{j=1}^{m} ( x_{j,i}- \bar{x}_{i})^2 }{ m } }\\ \text{sen}(\boldsymbol{x}^{adv}, \boldsymbol{x})=\sum_{i=1}^{n}\frac{\vert x^{adv}_{i} - x_{i} \vert}{\text{std}(x_{i})} \end{gathered} \]

Here \(x_{j,i}\) is the value of feature \(i\) in the \(j\)-th of the \(m\) instances in \(\mathbb{X}\), and \(\bar{x}_{i}\) is the mean of feature \(i\).
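The sensitivity metric can be sketched as below, assuming all columns of the dataset `X` are numerical. The small constant `eps` is an assumption added to avoid division by zero for constant features, and the data are hypothetical.

```python
import numpy as np

def sensitivity(x_adv, x, X, eps=1e-12):
    """l_1 distance with each feature weighted by the inverse of its std over dataset X."""
    std = np.asarray(X, dtype=float).std(axis=0)  # population std (divides by m)
    diff = np.abs(np.asarray(x_adv, dtype=float) - np.asarray(x, dtype=float))
    return float(np.sum(diff / (std + eps)))

# Hypothetical dataset: feature 1 has std 1, feature 2 has std 5
X = np.array([[0.0, 0.0],
              [2.0, 10.0]])
x = np.array([0.0, 0.0])
x_adv = np.array([1.0, 5.0])

sen = sensitivity(x_adv, x, X)
```

A change of one standard deviation contributes the same amount for every feature, so perturbations on narrowly distributed features are penalised more per unit of change.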

Imperceptibility Property

Introduce domain knowledge into the evaluation of imperceptibility.

Immutability

- Attack methods should not change sensitive features from domain knowledge.

Feasibility

- Attack methods should maintain the soundness of perturbed feature values from domain knowledge.
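Both properties can be checked with simple rules once the domain knowledge is written down. The sketch below assumes a hypothetical feature layout `[age, race_code, bmi]`, treats the race feature as immutable, and uses the BMI range of 15 to 40 discussed in the qualitative analysis; the bounds for the other features are placeholders.

```python
import numpy as np

def violates_immutability(x_adv, x, immutable_idx, tol=1e-8):
    """True if any feature marked as immutable was changed by the attack."""
    x_adv = np.asarray(x_adv, dtype=float)
    x = np.asarray(x, dtype=float)
    return bool(np.any(np.abs(x_adv[immutable_idx] - x[immutable_idx]) > tol))

def violates_feasibility(x_adv, lower, upper):
    """True if any perturbed feature falls outside its feasible range."""
    x_adv = np.asarray(x_adv, dtype=float)
    return bool(np.any((x_adv < lower) | (x_adv > upper)))

# Hypothetical layout: [age, race_code, bmi]
immutable_idx = [1]
lower = np.array([0.0, 0.0, 15.0])
upper = np.array([120.0, 1.0, 40.0])

x = np.array([30.0, 1.0, 25.0])
x_bad = np.array([30.0, 0.0, 45.0])  # race changed, BMI out of range
x_ok = np.array([31.0, 1.0, 30.0])   # only mutable features changed, all in range
```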

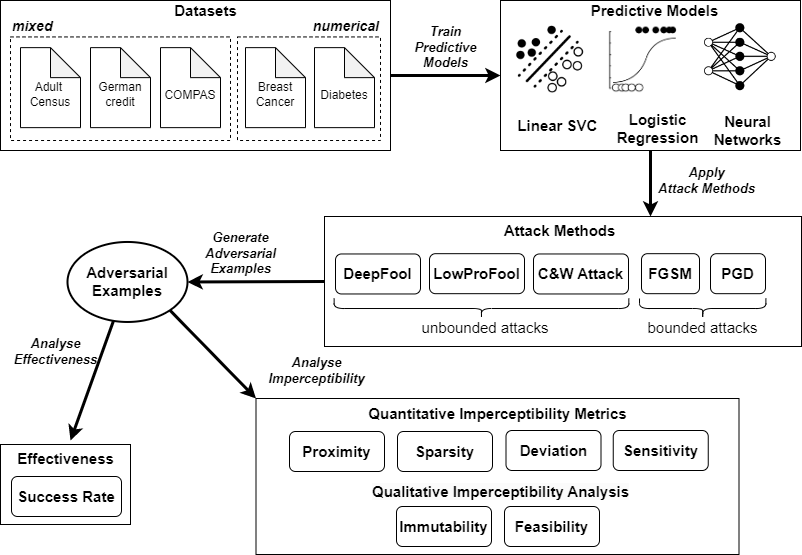

Experiment Pipeline

Model Accuracy

The three predictive models reach similar, reasonable accuracy on each dataset, so the impact of adversarial examples can be compared across models.

| Datasets | LR | SVM | MLP |
|---|---|---|---|
| Adult | 0.8524 | 0.8532 | 0.8521 |
| German | 0.8125 | 0.8125 | 0.7969 |
| COMPAS | 0.7933 | 0.7976 | 0.8089 |
| Diabetes | 0.7578 | 0.7578 | 0.7266 |
| Breast Cancer | 0.9844 | 0.9844 | 0.9688 |

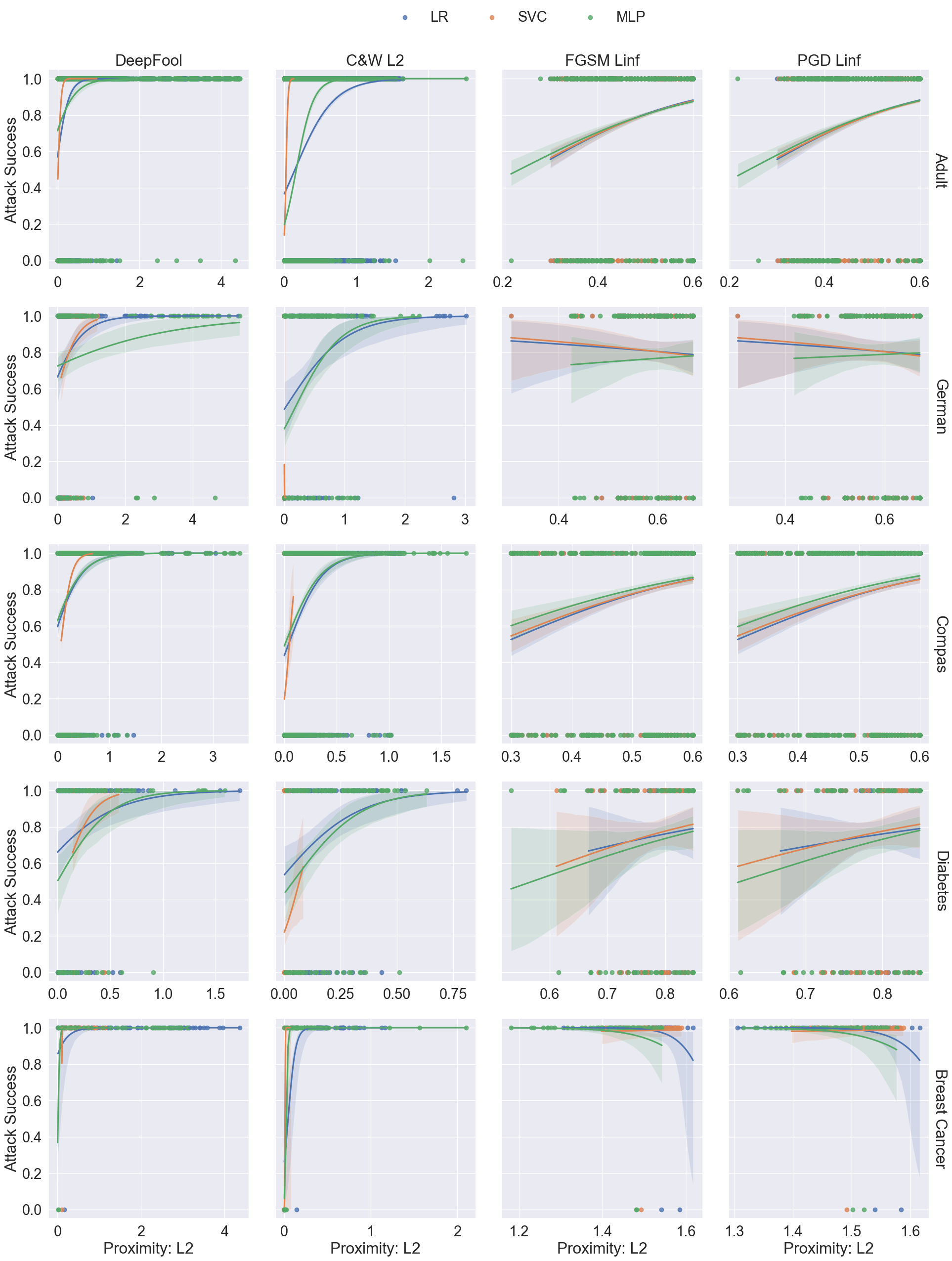

Finding 1

Phase 2.3

There is a trade-off between imperceptibility and effectiveness.

Finding 2

Optimisation-based attacks should be the preferred methods for tabular data.

Overall, the C&W \(\ell_2\) attack obtains the best balance between imperceptibility and effectiveness.

The C&W attack optimises a loss that combines the perturbation magnitude, measured by a distance metric, with the prediction confidence, captured by an objective function \(z\) weighted by a constant \(c\):

\[ \argmin_{\boldsymbol{x}^{adv}} \Vert\boldsymbol{x}-\boldsymbol{x}^{adv}\Vert_p + c\cdot z(\boldsymbol{x}^{adv}) \]
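To make the two terms concrete, the sketch below only evaluates this objective for a candidate \(\boldsymbol{x}^{adv}\); it does not run the optimisation. It assumes one common untargeted choice for \(z\), a hinge on the gap between the true-class logit and the best other logit with confidence margin `kappa`, and a hypothetical linear `logits_fn`. The original C&W formulation also uses a squared \(\ell_2\) term and a change of variables, which are omitted here.

```python
import numpy as np

def cw_objective(x_adv, x, y, logits_fn, c=1.0, p=2, kappa=0.0):
    """Distance term plus c times a hinge on the logit gap (simplified sketch)."""
    logits = np.asarray(logits_fn(x_adv), dtype=float)
    true_logit = logits[y]
    best_other = np.max(np.delete(logits, y))
    z = max(true_logit - best_other, -kappa)  # reaches -kappa once another class leads by kappa
    dist = np.linalg.norm(np.asarray(x_adv) - np.asarray(x), ord=p)
    return float(dist + c * z)

# Hypothetical two-class linear model: logits = W @ x
W = np.eye(2)
logits_fn = lambda v: W @ v

x = np.array([2.0, 0.0])  # classified as class 0
y = 0
obj_clean = cw_objective(x, x, y, logits_fn)
obj_adv = cw_objective(np.array([1.0, 1.0]), x, y, logits_fn)
```

In this toy case the candidate on the decision boundary has a lower objective than the unperturbed input, because removing the confidence term outweighs the distance paid.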

Finding 3

Adding sparsity as a term in the optimisation function is important for adversarial attacks on structured data.

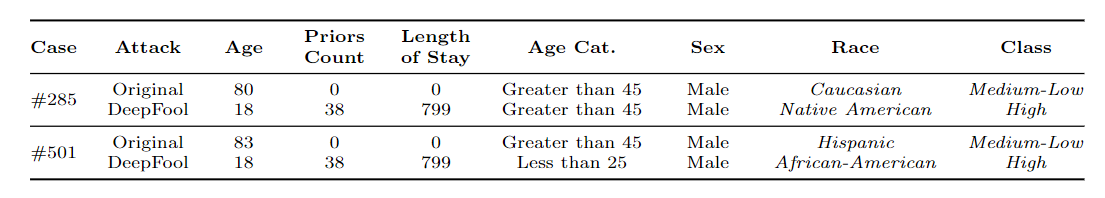

Qualitative Analysis: Immutability

- The likelihood of reoffending is flipped from Medium-Low to High;

- but the feature values of Race are changed.

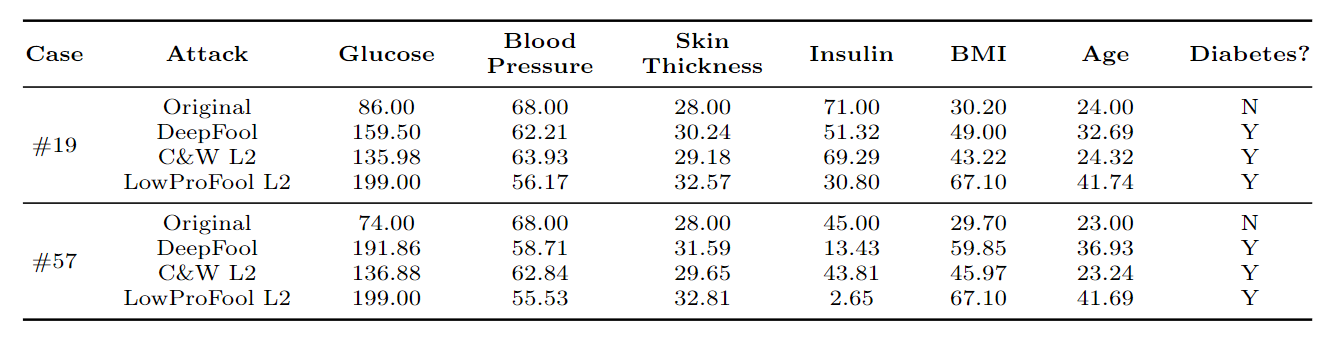

Qualitative Analysis: Feasibility

- According to medical domain knowledge, the feasible range of BMI is from 15 (Underweight - Severe thinness) to 40 (Obese Class III).

Limitations

- Limitations in evaluation settings

- Fixed perturbation budget \(\eta=0.3\)

- Limited number of datasets, models & attacks

- Side effects of one-hot encoding

- Categorical features similarity measure:

- Gower’s Distance (Gower 1971)

- Modified Value Difference Metric (Cost and Salzberg 1993)

- Association-Based Distance Metric (Le and Ho 2005)

- Absence of ablation study for tabular data
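As an illustration of the first categorical similarity measure listed above, here is a minimal sketch of Gower's distance (Gower 1971) for mixed-type records: numerical features contribute their absolute difference scaled by the feature range, categorical features contribute 0 on a match and 1 otherwise, and the result is the average over features. The feature layout, ranges and helper name are hypothetical.

```python
def gower_distance(a, b, is_categorical, ranges):
    """Average per-feature dissimilarity between two mixed-type records."""
    total = 0.0
    for ai, bi, cat, r in zip(a, b, is_categorical, ranges):
        if cat:
            total += 0.0 if ai == bi else 1.0    # simple matching for categories
        else:
            total += abs(ai - bi) / r if r else 0.0  # range-normalised difference
    return total / len(a)

# Hypothetical records: [age, sex, bmi]
a = [30, "Male", 20.0]
b = [40, "Female", 20.0]
is_categorical = [False, True, False]
ranges = [50, None, 10]  # value range of each numerical feature in the dataset

d = gower_distance(a, b, is_categorical, ranges)
```

Working on the raw categories in this way avoids measuring distances between one-hot encoded vectors.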

Future Work

References

Thank you!

- github.com/ZhipengHe/Imperceptibility-in-Adversarial-attack

- slides.zhipenghe.me/2023-IS-DC

- Made by Zhipeng “Zippo” HE with Reveal.js & Quarto

QUT Information Systems Doctoral Consortium 2023 (Stream B): Zhipeng “Zippo” HE